In the past, a criminal who wanted to steal from a home or business had to be daring enough to break in. Now, a poorly designed Internet of Things (IoT) device can give hackers an easier way in. Smart voice assistants, an increasingly important part of the consumer IoT market, are becoming an attractive target for hackers. Fortunately, manufacturers of these products can help keep attackers out by building in a hardware Root of Trust and following security best practices.

A Quicker and Easier Way to Connect

In recent years, voice assistants have become more popular as more people embrace technology that lets them search, stream, and control their connected devices by voice. By some estimates, up to 40% of people in the United States use a voice assistant at least once a month, and many increasingly depend on these devices in their everyday lives. Smart speakers, one type of voice-activated device, are the fastest-growing consumer tech product in history.

Smart speakers are quickly becoming the heart of the smart home. Other IoT products, including lighting, thermostats, entertainment, and security devices, integrate with the leading voice assistant brands. In fact, a survey by professional services firm PwC found that 89% of consumers shopping for a smart home device were “influenced by its compatibility with their voice assistant.”

How Do Smart Speakers and Voice Assistants Work?

The technology that powers these devices works in one of three ways:

- Traditional smart speakers rely on cloud-based services: they need an internet connection to interpret a user’s request in real time. During an internet or network outage, they can no longer respond to requests.

- Other devices process information locally, on the product itself; in other words, at the edge.

- Hybrid solutions offer the best of both worlds – they process spoken commands locally but use the cloud if necessary, for example, to perform an internet search. In these products, voice commands can be analyzed at the edge or in the cloud, depending on complexity, latency, and privacy requirements.
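To make the hybrid model more concrete, here is a minimal Python sketch of how a device might decide where to process a recognized command. The intent names, the `LOCAL_INTENTS` set, and the `route` function are hypothetical illustrations, not any vendor’s API; real products weigh complexity, latency, and privacy with far richer logic.

```python
from typing import FrozenSet

# Hypothetical intents that a small on-device model can resolve without the cloud.
LOCAL_INTENTS: FrozenSet[str] = frozenset(
    {"lights_on", "lights_off", "set_timer", "volume_up", "volume_down"}
)


def route(intent: str, cloud_available: bool, local_only: bool = False) -> str:
    """Decide where a recognized command is processed: 'edge', 'cloud', or 'unavailable'."""
    if intent in LOCAL_INTENTS:
        # Simple, latency-sensitive commands are handled on the device (the edge),
        # so in this sketch they keep working during an outage.
        return "edge"
    if local_only or not cloud_available:
        # Complex requests (e.g. an internet search) need the cloud; if the user has
        # opted out of cloud processing or the network is down, they cannot be served.
        return "unavailable"
    return "cloud"
```

For example, `route("lights_on", cloud_available=False)` still returns `"edge"`, while `route("web_search", cloud_available=False)` returns `"unavailable"`.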

Smart speakers and other voice assistants keep getting smarter as manufacturers continue to evolve voice-enabled technologies. However, for these devices to keep developing and to be adopted at scale, we must build and maintain consumer trust in them, particularly regarding the security and privacy implications of their new functions.

Smart Speaker and Voice Assistant Security Concerns

Some consumers remain hesitant about smart speakers because of concerns about their security. In a survey conducted by Northstar, almost half of respondents listed data privacy and security as one of the main barriers to wider adoption. Some consumers fear that someone may be listening to what is said to or near the device; 38% of respondents to PwC’s survey said exactly that.

So, how real are these concerns?

By design, smart speakers continuously monitor the audio stream from their microphone to detect a trigger phrase, known as a “wake word”, that precedes a user command. Wake words have been controversial: up to 91% of users report that their smart speaker has been activated by mistake after falsely hearing a wake word. Each false activation may capture conversation the user never meant to share, so it is clear the public wants its privacy respected. Privacy protections are also essential when the voice assistant performs commercial functions, such as purchasing online.
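The privacy principle behind wake words can be sketched as a gate: audio sits only in a short local ring buffer that is constantly overwritten, and frames are forwarded only after a detector fires. In this minimal Python sketch, `gate_audio`, the `detector` callable, and the threshold, window, and capture sizes are all hypothetical; a real wake-word engine runs a trained model on raw audio samples.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List, Sequence


def gate_audio(
    frames: Iterable[bytes],
    detector: Callable[[Sequence[bytes]], float],  # hypothetical wake-word scorer
    threshold: float = 0.8,
    window: int = 4,   # frames kept locally for detection
    capture: int = 3,  # frames forwarded after a wake word
) -> List[bytes]:
    """Return only the audio frames that would leave the device."""
    ring: Deque[bytes] = deque(maxlen=window)  # oldest frames are overwritten
    forwarded: List[bytes] = []
    remaining = 0  # frames still to capture after a detection
    for frame in frames:
        if remaining:
            forwarded.append(frame)
            remaining -= 1
            continue
        ring.append(frame)
        if detector(list(ring)) >= threshold:
            ring.clear()  # discard pre-wake-word audio rather than uploading it
            remaining = capture
    return forwarded
```

Raising `threshold` trades fewer false activations, and therefore less audio leaving the device, against a higher chance of missing a genuine wake word.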

A second concern, and perhaps one of the most worrying, is that a compromised smart speaker integrated into a smart home can give hackers access to other IoT devices, such as smart lights or smart thermostats. In the PwC survey, 44% of respondents said they used their voice assistant to control other smart home devices, which shows how real this threat could be, particularly in homes with connected security devices such as smart security cameras or smart door locks.

Although documented attacks on smart speakers are still limited, these concerns are not misplaced. A study by academics at the University of Salford on the security and privacy challenges of voice assistants found that attacks on these devices were becoming more common and evolving in ways that made them easier for hackers to carry out.

Threat Modeling: Understanding the Assets and Risks of a Smart Speaker

If the demand for smart speakers and voice assistants is to continue to grow, people must be able to trust the devices and the technology that underpins them. Following the PSA Certified methodology, the first step in security design is to complete a threat model. Understanding the assets of the system helps us understand the threats and, consequently, which components a smart speaker needs in order to be “secure”. For a smart speaker, some of the assets include its platform data (such as its unique identity, firmware, and event logs), its user data (such as the audio exchanged with the speaker), and any specific configuration or credential information.

Once the assets are identified, the manufacturer of a voice assistant can identify the most common ways a hacker might attack the device. For a smart speaker, examples include impersonation attacks, man-in-the-middle attacks, firmware abuse, and repudiation.
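One way to keep a threat model actionable is to record which assets each threat targets and which security requirements mitigate it, then check for gaps. The Python sketch below uses the threats and assets named above; the requirement labels (such as `secure_channel`) and the threat-to-mitigation mapping are illustrative assumptions, not taken from the PSA Certified TMSA.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Threat:
    name: str
    assets: FrozenSet[str]       # assets the threat targets
    mitigations: FrozenSet[str]  # requirements that address it (illustrative mapping)


THREATS: List[Threat] = [
    Threat("impersonation", frozenset({"identity"}), frozenset({"attestable_identity"})),
    Threat("man_in_the_middle", frozenset({"user_audio", "credentials"}),
           frozenset({"secure_channel"})),
    Threat("firmware_abuse", frozenset({"firmware"}),
           frozenset({"secure_boot", "secure_update"})),
    Threat("repudiation", frozenset({"event_logs"}), frozenset({"secure_storage"})),
]


def gaps(implemented: Set[str]) -> List[str]:
    """Return the threats whose mitigating requirements are not all implemented."""
    return [t.name for t in THREATS if not t.mitigations <= implemented]
```

For example, `gaps({"attestable_identity", "secure_boot", "secure_update"})` returns `["man_in_the_middle", "repudiation"]`, pointing to the requirements still missing from the design.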

If you’re looking for help with the threat modeling process, PSA Certified has recently released a new Threat Model and Security Analysis (TMSA) document that specifically addresses the security concerns of smart voice assistants. It is issued under a Creative Commons license, so it can be adapted to guide the analysis of your own product. This example strips the complexity out of threat modeling, providing a standardized process that can be tailored to your IoT device. In addition, the PSA Certified Foundational Training Course includes a chapter dedicated to threat modeling that covers its terminology, definitions, and benefits. Together, these resources simplify threat modeling for OEMs, helping them take a systematic, effective approach without dedicating unnecessary time or resources to the process.

How to Build Trust in Smart Speakers and Voice Assistants

With the assets and threats in mind, what key steps should you take to make smart speakers as secure as possible? First, a connected device must be built on a strong foundation. A Root of Trust (RoT) is a foundational security component that provides a set of implicitly trusted functions and serves as a source of integrity for the entire system or device. By protecting the integrity of a device, a RoT provides assurance and helps build trust into connected devices, including voice assistants. The RoT plays a pivotal role in enabling the Security Functional Requirements (SFRs) that a threat model identifies.
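The idea of a RoT as a source of integrity can be illustrated with a simplified chain of trust: only the digest of the first boot stage is anchored in the RoT, and each verified stage vouches for the next. This Python sketch is an illustration under stated assumptions: the `Stage` type and helper functions are hypothetical, and bare SHA-256 digests stand in for the signature verification against a RoT-held public key that production secure boot typically uses.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Stage:
    """Hypothetical boot stage: its code plus the digest it expects of the next stage."""
    code: bytes
    next_digest: bytes  # empty for the final stage


def stage_digest(stage: Stage) -> bytes:
    # The digest covers both the code and the embedded next-stage digest,
    # so neither can be altered without detection.
    return hashlib.sha256(stage.code + stage.next_digest).digest()


def build_chain(codes: Sequence[bytes]) -> Tuple[bytes, List[Stage]]:
    """Build stages from last to first; return the root digest to provision into the RoT."""
    stages: List[Stage] = []
    next_digest = b""
    for code in reversed(codes):
        stage = Stage(code, next_digest)
        stages.append(stage)
        next_digest = stage_digest(stage)
    stages.reverse()
    return next_digest, stages


def secure_boot(rot_digest: bytes, stages: Sequence[Stage]) -> bool:
    """Verify each stage before 'running' it; stop at the first mismatch."""
    expected = rot_digest  # held in immutable RoT storage on a real device
    for stage in stages:
        if not hmac.compare_digest(stage_digest(stage), expected):
            return False  # unauthorized or tampered software
        expected = stage.next_digest
    return expected == b""  # True only if the chain ended at its final stage
```

For example, after `root, chain = build_chain([b"bootloader", b"os", b"app"])`, `secure_boot(root, chain)` returns `True`, while replacing the last stage’s code or dropping a stage makes it return `False`.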

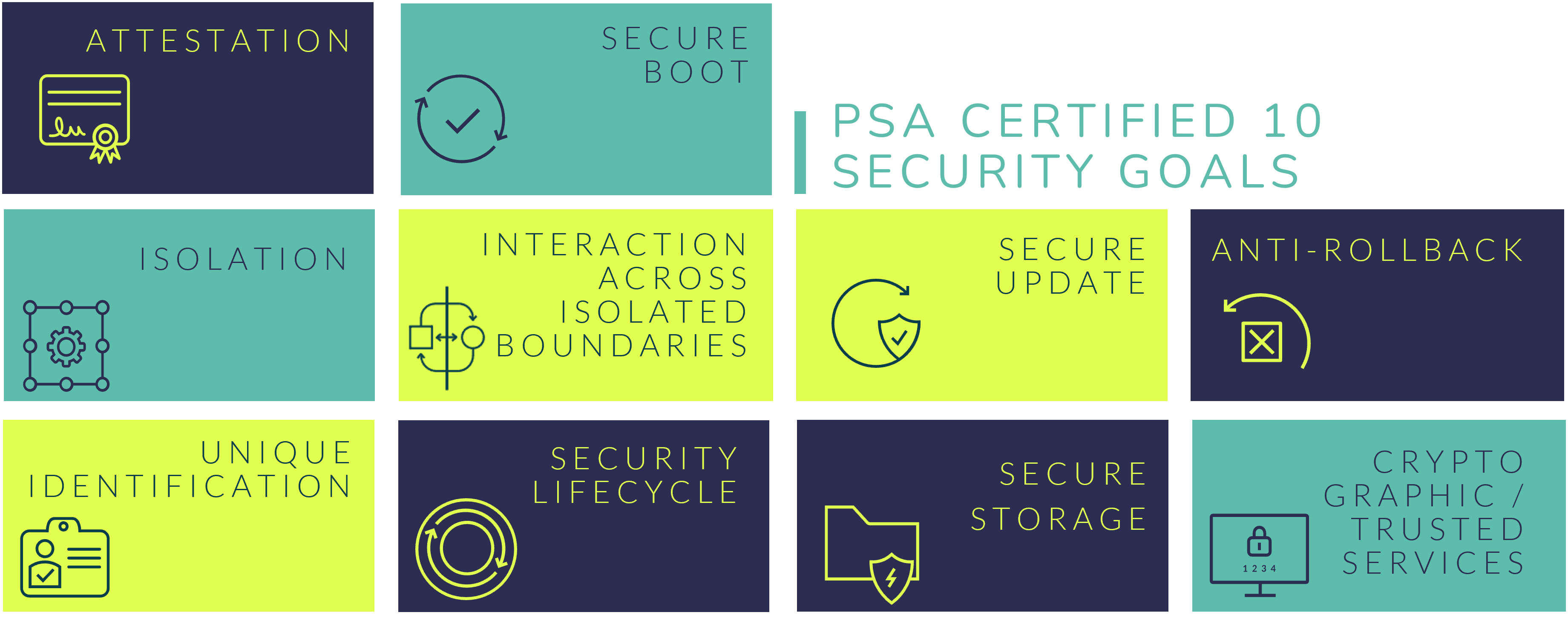

We won’t cover every possible SFR for smart speakers here, but examples include a unique, attestable identity; secure storage to prevent private data from leaking; secure boot, so that only authorized software can execute on the device; and a secure update process. Many of these SFRs also appear in the PSA Certified 10 security goals, which outline high-level security functions that should be implemented in every connected device.
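A secure update process typically combines authentication of the new image with anti-rollback protection, so an attacker cannot reinstall older, vulnerable firmware. The Python sketch below is hypothetical throughout: `Device`, `Update`, and `sign_update` are illustrative names, and an HMAC with a shared key stands in for the asymmetric signature a real device would verify with a public key (which avoids storing a signing secret on the device).

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Update:
    """Hypothetical update package: version, firmware image, and authenticator."""
    version: int
    image: bytes
    tag: bytes


def _message(version: int, image: bytes) -> bytes:
    # Authenticate the version together with the image so neither can be altered.
    return version.to_bytes(4, "big") + image


def sign_update(key: bytes, version: int, image: bytes) -> Update:
    # Stand-in for the manufacturer's signing step (HMAC, not a real signature).
    tag = hmac.new(key, _message(version, image), hashlib.sha256).digest()
    return Update(version, image, tag)


class Device:
    def __init__(self, key: bytes, version: int, image: bytes) -> None:
        self._key = key         # would be protected by the RoT's secure storage
        self.version = version  # anti-rollback counter
        self.image = image

    def apply_update(self, update: Update) -> bool:
        expected = hmac.new(self._key, _message(update.version, update.image),
                            hashlib.sha256).digest()
        if not hmac.compare_digest(expected, update.tag):
            return False  # reject unauthenticated or tampered images
        if update.version <= self.version:
            return False  # reject rollback to older, possibly vulnerable firmware
        self.image, self.version = update.image, update.version
        return True
```

Checking the version only after authentication means an attacker cannot forge a high version number to block future legitimate updates.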

At the very least, smart speakers need Software Attacker Resistance: isolation between the secure and non-secure parts of the system, so that an attacker cannot run code in the secure domain, compromise functional requirements, or compromise the integrity and confidentiality of the application. To protect against software attacks, a manufacturer would select a PSA Certified Level 2 silicon chip. A chip certified to this level has undergone independent penetration testing to establish whether its RoT meets the nine security requirements of the PSA Certified Level 2 PSA-RoT protection profile, demonstrating its ability to protect against scalable software attacks.

PSA Certified: A Security Framework to Protect Smart Speakers and Voice Assistants

By providing free, editable resources such as threat models, along with a comprehensive framework, PSA Certified is democratizing IoT security, making it quicker, more cost-effective, and more straightforward to build the right level of protection into a device. By facilitating best-practice IoT security, PSA Certified helps device manufacturers build trust in connected devices, supporting the continued adoption of the IoT and securing the future of digital transformation.

Join our award-winning IoT security ecosystem of nearly 70 partners and over 130 PSA Certified products, and ensure your next IoT innovation is secure by design.

Next Steps

Learn more about PSA Certified and how the simple four-step framework can help you implement best practice security into your devices.